Stable Diffusion: A Quick Tour

In the past two weeks, something incredible happened in the machine learning space: an open-source image generation model that can compete or even outperform closed-source alternatives such as OpenAI’s DALL-E or Google’s Imagen has been released to the public. This development generated—no pun intended—an unprecedented wave of excitement and buzz in the space. You don’t have to take my word for it.

Developed by Stability.Ai, Stable Diffusion can generate highly detailed images from simple text prompts. But the real novelty lies in the fact that it can do so on consumer-grade graphic cards. Prior to this, most latent diffusion models, as they are called, required much more powerful machines to do their work.

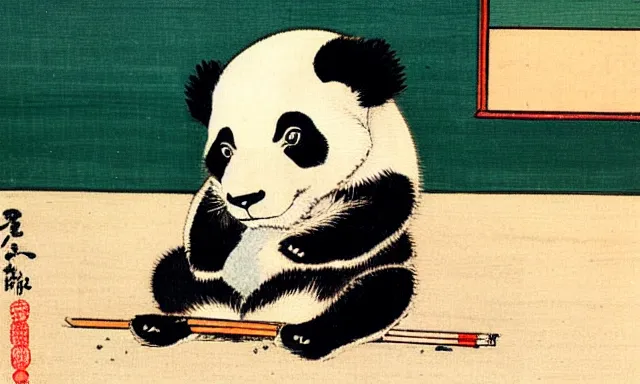

To illustrate why this is a big deal, here is one of the images1 my 5 years old GTX 1080 graphics card generated for the prompt “A photo of a panda sitting in a primary school classroom”:

“A photo of a panda sitting in a primary school

classroom”

“A photo of a panda sitting in a primary school

classroom”

The model managed to capture the meaning of the prompt and even nailed the focus blur for that perfect photograph feel. The fascinating part is that even small variations in the prompt or the parameters produce widely different results. For instance, consider the following prompt-output combination.

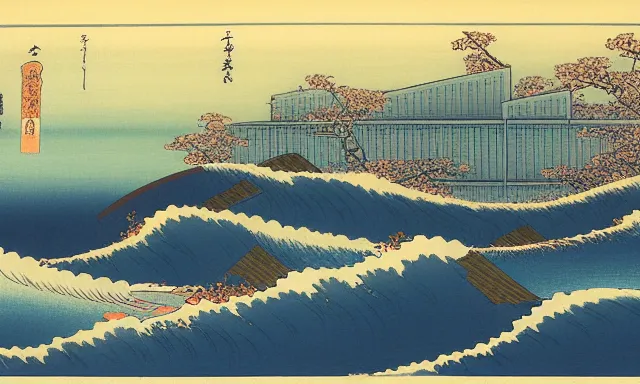

“A painting by Katsushika Hokusai of a panda sitting in a primary school

classroom”  “A painting by

Katsushika Hokusai of a panda sitting in a primary school classroom”

“A painting by

Katsushika Hokusai of a panda sitting in a primary school classroom”

Not only did the model recognize and reproduce the style of the famous Japanese artist, but it managed to do so while capturing the historical context by eliminating all modern details from the image. If we try with a completely different prompt that purposefully includes words like “cyberpunk” and “futuristic”, it still does its best to stay faithful to the aesthetics of the Ukiyo-e art form.

“A painting by Katsushika Hokusai of a cyberpunk futuristic city”  A painting by Katsushika Hokusai of a cyberpunk

futuristic city

A painting by Katsushika Hokusai of a cyberpunk

futuristic city

But the use cases of Stable Diffusion don’t stop here. It can generate images based on other images (image-to-image), fill empty pixels inside an image (inpainting), fill empty pixels outside an image (outpainting), interpolate between images, and so forth. These building blocks open the door to large swaths of applications in more than one creative field.

Image-to-image generation is particularly powerful as it allows the user to have a much bigger say in the final output, eliminating the guesswork and making the prompt writing process much easier. To illustrate, consider this example below where I started with a crude manual drawing to the left, added the prompt “a rainbow-colored umbrella”, and ended with the generated image to the right (granted after a half a dozen tries).

Left: input image. Right: Image generated by

Stable Diffusion.

Left: input image. Right: Image generated by

Stable Diffusion.

How to Get Started

Being an open-source model, it took only a couple of weeks for a barrage of wrappers, forks, GUIs, and CLIs to emerge. Unfortunately, the tooling can still be arcane to most people, even developers not familiar with machine learning. There is a non-trivial amount of fiddling with python environments and other dependencies to get the intended results.

The most straightforward way to take SD for a spin is via the official DreamStudio web-based interface. You get 200 credits when you create a new account, where each credit corresponds to one 512px by 512px image. Another option is via the official Discord server which features a bot command that parses prompts and displays the generated images directly in the channel.

If you’re familiar with Python tooling or just feeling adventurous, and have a computer with enough GPU juice to run the model2, this fork has more thorough setup instructions than many others I tried.

Alternatives

As it stands now, Stable Diffusion is the only open-source latent diffusion model around. Alternatives in the closed-source side of the fence include the buzz-worthy Midjourney discord server, which seems to excel at generating stunning artwork using very minimal prompts; something SD still needs a bit more help with in my tests using the v1.4 model weights.

DALL·E is the other commercial alternative—albeit only for those who got access to the closed beta.

Caveats

Generating images that are jaw-droppingly good takes a lot of iterations, prompt engineering, and parameter adjustments. It’s quite rare for the first image to match what you had in mind when you wrote the prompt. The model also seems to have trouble with human limbs and fingers, often drawing more or less than necessary. Anatomy in general seems to be a hit or miss. Another shortcoming is the often-incorrect interpretation of relations between various objects and actors in the prompt.

That being said, it’s been only a couple of weeks, and these early limitations are already on the radar of the Stability Ai team and the community.

The other aspect to consider is the ethical one. Using text-to-image to replicate the style of living artists without their permission is not only possible but on the way to become the norm. The model is also as biased as the data it was trained on. The good news is that SD can be retrained by anyone invested enough on more curated datasets to minimize these issues.

Personal Thoughts

Initially I was very skeptical of letting machines meddle with art, especially when they are developed by for-profit companies behind the curtains. But as the novelty started wearing off, I slowly realized that these tools aren’t meant to replace humans as many skeptics, including myself, tend to believe. For one, these models are far from perfect. The generated images are often distorted or comically incoherent. Even if these tools improve, they are never going to make art on their own or compete with humans when it matters.

One analogy I heard that made a lot of sense is how photography never replaced artists as some were concerned about. Neither did printing before it. In the best case scenario, AI-generated “art” is going to be accepted as its own sub-field. In the worst case, this technology is going to be nothing more than yet another tool for people, artists included, to work with.

As someone who always enjoyed drawing—and made a living from it at some point—using Stable Diffusion won’t stop me from reaching out to my pencil and sketchbook (or iPad these days). In fact, I’m already enjoying how it augmented my work by means of inspiration, quick experimentation, and fast turnaround. Taking my rough sketches and storyboards to the next level is all of a sudden much less time consuming than it ever was.

Granted, this is a new frontier and it remains to be seen how things will evolve from here. I am relatively optimistic this time around.

Learn More

Since the public release, it’s been hard to run out of resources, guides, and tools related to SD. And quite frankly, it’s overwhelming for someone new to machine learning like myself. But if you’re curious about the topic and would like to learn more, you might find my growing collection of links of some use!

Last but not least, why bother with closing words when we can do closing images? Enjoy!

“A Kawase Hasui painting of a UFO in the icy landscape of Antarctica”  A Kawase Hasui painting of a UFO in the icy

landscape of Antarctica

A Kawase Hasui painting of a UFO in the icy

landscape of Antarctica

“A kid’s drawing of the pyramids”  A kid’s drawing of the pyramids

A kid’s drawing of the pyramids

“An intricate painting of a cucumber, renaissance style”  An intricate painting

of a cucumber, renaissance style

An intricate painting

of a cucumber, renaissance style